Blog Post by Yari

November 2nd, 2025

You know what I like about tech? Its application is limitless. Whatever you can imagine, you can build, and today we’re going to build something with our favorite Harvard-educated lawyer, Elle Woods.

Neural networks have become one of the most transformative concepts in AI, shaping how machines learn, adapt, and respond to information. But long before deep learning, transformers, and chatbots, the idea began decades ago with a simple question: can we teach machines to think like humans?

That’s right girl, today we get technical.

A time-traveling history lesson.

The story begins in the 1940s with researchers Warren McCulloch and Walter Pitts, who looked at neurons and said, “Bet we can make that into a math model.” They then created the first mathematical model of an artificial neuron, inspired by how the human brain processes signals. Their work formed the earliest foundation of what we now call a neural network.

In the late 1950s, Frank Rosenblatt introduced the Perceptron, one of the first algorithms capable of learning from data and recognizing patterns. It proved that machines could begin to “learn” rather than just calculate, and thus the downfall of calculators began in the 1900s. It was highly limited, clunky, and slow (think vintage tech with a dial-up personality), and yet it was groundbreaking: it sparked the evolution that eventually led to today’s advanced systems like ChatGPT, image generators, and yes, even Siri trying her best to understand your accent. Siri once told me I had an accent, a Long Island accent. Cringe but true.

How Neural Networks Work

At their core, neural networks are made up of layers that process information step by step. It’s similar to how the human brain connects thoughts, except with a much more mathematical process and a lot fewer emotions. Still 100% more functional than your nearest finance bro.

Neural networks consist of three main types of layers.

- Input Layer: This is where the data enters. Think of it as the first impression: it can be words, pixels, sound waves, anything you can imagine, honestly.

- Hidden Layers: This is where patterns are discovered, like the brain making connections in real time.

- Output Layer: This is the final result, the network’s conclusion after processing whatever data you fed it.
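To make those three layers concrete, here’s a minimal sketch in plain Python of one piece of data flowing through them once. The layer sizes (3 inputs, 2 hidden neurons, 1 output), the weight values, and the `dense` and `forward` helpers are all made up for illustration; nothing here has been trained. Each layer uses the weights and activation functions described in the next two paragraphs.

```python
import math

def relu(x):
    # Hidden-layer activation: pass positive values through, zero out negatives
    return max(0.0, x)

def sigmoid(x):
    # Output activation: squash any number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    # One fully connected layer: each neuron takes a weighted sum of all inputs,
    # adds its bias, then applies the activation function
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs):
    # Hypothetical hand-picked weights: 3 inputs -> 2 hidden neurons -> 1 output
    hidden_weights = [[0.5, -0.2, 0.1],
                      [0.3, 0.8, -0.5]]
    hidden_biases = [0.0, 0.1]
    output_weights = [[1.0, -1.0]]
    output_biases = [0.0]

    hidden = dense(inputs, hidden_weights, hidden_biases, relu)     # hidden layer
    output = dense(hidden, output_weights, output_biases, sigmoid)  # output layer
    return hidden, output

hidden, output = forward([1.0, 0.5, 0.2])  # input layer: three raw numbers
print("Hidden layer:", hidden)
print("Output layer:", output)
```

The output is a single number between 0 and 1, which you could read as the network’s “confidence” in some yes/no answer. With random weights like these it means nothing yet; training is what makes it meaningful.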

Each connection in the network has a weight, meaning some inputs matter more than others.

Each neuron in the network also has an activation function, which decides whether (and how strongly) it “fires” based on its weighted inputs. It’s basically the tech version of intuition.
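Here’s a minimal sketch of a single neuron putting weights and an activation function together, assuming made-up inputs, weights, and bias. It multiplies each input by its weight, adds everything up, then lets the activation decide. The `step` function is the classic all-or-nothing “fire or don’t” rule; `sigmoid` is a smooth version that reports a firing strength between 0 and 1.

```python
import math

def step(z, threshold=0.0):
    # All-or-nothing: fire (1) only if the weighted sum clears the threshold
    return 1 if z >= threshold else 0

def sigmoid(z):
    # Smooth version: a firing "strength" between 0 and 1
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation):
    # Weighted sum: inputs with bigger weights matter more
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# Hypothetical example: three inputs, where the second one matters most
inputs = [1.0, 1.0, 0.0]
weights = [0.2, 0.9, 0.5]
bias = -0.5

print(neuron(inputs, weights, bias, step))     # weighted sum is about 0.6, so it fires
print(neuron(inputs, weights, bias, sigmoid))  # same sum, reported as a strength
```

Turn off the heavily weighted second input (`[1.0, 0.0, 0.0]`) and the weighted sum drops to about -0.3, so the step neuron stays quiet.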

During training, the network repeatedly adjusts its internal weights based on its mistakes, slowly improving with each iteration. The technique that works out how much each weight contributed to a mistake, so that weight can be corrected, is called backpropagation. Over time, the network learns to recognize patterns, classify information, and even generate new content. It’s the same way we refine our judgment and intuition through experience, only faster, with a lot more math and fewer emotional crashouts. Well, we’ll revisit that in the future.
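Here’s a minimal sketch of that learn-from-mistakes loop, assuming the simplest possible case: one sigmoid neuron learning the logical OR of two inputs. With only one layer, backpropagation boils down to a single chain-rule step: the error at the output tells each weight which way to move. The dataset, learning rate, and epoch count are arbitrary choices for illustration, not tuned values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Training data for logical OR: (inputs, target)
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights = [0.0, 0.0]  # start out knowing nothing
bias = 0.0
learning_rate = 0.5

for epoch in range(2000):
    for inputs, target in data:
        # Forward pass: weighted sum, then activation
        prediction = sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        # Mistake signal: for a sigmoid output with cross-entropy loss, the
        # gradient with respect to the weighted sum is simply (prediction - target)
        error = prediction - target
        # Backward pass: each weight's share of the blame is error * its input,
        # so nudge it a little in the opposite direction
        weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
        bias -= learning_rate * error

def predict(inputs):
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if sigmoid(z) >= 0.5 else 0

print([predict(inputs) for inputs, _ in data])  # -> [0, 1, 1, 1]
```

Deeper networks repeat that backward step layer by layer, passing each layer’s share of the error back to the one before it, which is where the “back” in backpropagation comes from.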

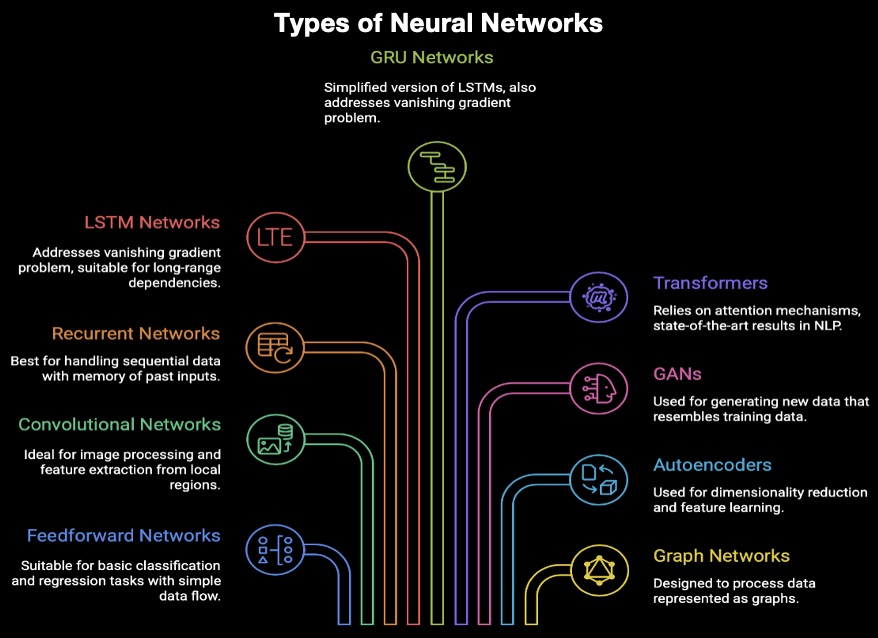

Types of Neural Networks

There are many kinds of neural networks, and they’re not all the same, but here are some basic ones you might want to familiarize yourself with:

- Feedforward Neural Networks (FNNs): The simplest type, where data moves in one direction, from input to output. Basic. Like ordering a coffee and then getting the coffee: one straight line.

- Recurrent Neural Networks (RNNs): Designed for sequential data like language, they remember what came before. Think memory recall, or a coffee shop with a return policy: you can always go back and get it remade.

- Convolutional Neural Networks (CNNs): Specialized for image and video recognition, they identify spatial patterns like shapes or textures. Crafty, artsy, your favorite barista.

- Transformers: Not to be confused with the robots from outer space. This is the architecture behind modern Large Language Models (LLMs). Transformers are trained on massive datasets of text and code and use a mechanism called attention to learn deep contextual relationships between words, phrases, and ideas. It’s the reason ChatGPT knows what you mean when you say, “But why did my barista get my coffee order wrong?”
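To see how differently these architectures treat data, here’s a toy sketch in plain Python. It assumes one number per step instead of real vectors, and every weight is hand-picked rather than trained. The feedforward function looks at one input once; the recurrent loop carries a hidden state (its memory) from step to step; the convolution slides one small kernel across the sequence looking for a local pattern; and the attention function is a heavily simplified, single-number version of the idea inside transformers: score every position against a query, then blend the positions by those scores.

```python
import math

def feedforward(x, w=0.5, b=0.1):
    # One straight line: input -> weighted sum -> activation -> output
    return math.tanh(w * x + b)

def recurrent(sequence, w_in=0.5, w_hidden=0.8):
    # The hidden state is the network's memory of everything seen so far
    hidden = 0.0
    for x in sequence:
        hidden = math.tanh(w_in * x + w_hidden * hidden)
    return hidden

def convolve(sequence, kernel=(-1.0, 2.0, -1.0)):
    # Slide the same small kernel over every window; this one responds to spikes
    k = len(kernel)
    return [sum(kernel[j] * sequence[i + j] for j in range(k))
            for i in range(len(sequence) - k + 1)]

def attention(query, keys, values):
    # Score each position by how well its key matches the query
    scores = [query * key for key in keys]
    # Softmax: turn scores into positive weights that sum to 1
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Blend the values, paying the most attention to the best matches
    return sum(w * v for w, v in zip(weights, values)), weights

sequence = [0.0, 1.0, 0.0, 0.0]
print("Feedforward:", feedforward(1.0))
print("Recurrent:", recurrent(sequence))    # still positive: it remembers the 1.0
print("Convolution:", convolve(sequence))   # -> [2.0, -1.0]
print("Attention:", attention(1.0, sequence, [10.0, 20.0, 30.0, 40.0]))
```

Notice the recurrent output stays positive even though the last two inputs are zero: the memory of that earlier 1.0 fades but doesn’t vanish.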

Neural Networks in Real Life

The impact of neural networks extends across nearly every major sector:

- Software engineering with Code Generation: Writing code in multiple programming languages; think vibe coding.

- Graphic design with Image Generation: Creating visuals from text descriptions; think image generation in ChatGPT, or Sora for video.

- Pharmaceuticals with Drug Discovery: Predicting molecular behavior and identifying new drug candidates, which can shorten design time.

In natural language processing (NLP), neural networks are the foundation of technologies that understand, translate, summarize, and generate human language. They power virtual assistants, chatbots, and text-based AI systems capable of writing essays, analyzing tone, or even mimicking human creativity. But these are all just words, and sometimes you need to see something before you can understand it, so I’ll provide a simple code model that you can start learning from and playing around with today.

Elle Woods’ First Court Case.

Elle Woods is more than a pop culture icon; she represents how intelligence can evolve through persistence, feedback, and adaptation, the same principles behind how neural networks learn. Elle’s basically the human version of a neural network: data in, brilliance out, powered by persistence and most likely Prada (like me). Now imagine the logic of a neural network applied to the infamous case that Elle wins in the film, her first court case; we’ll then validate our model against the movie later on.

In the model (no panic, I’ve provided a screenshot of the code below):

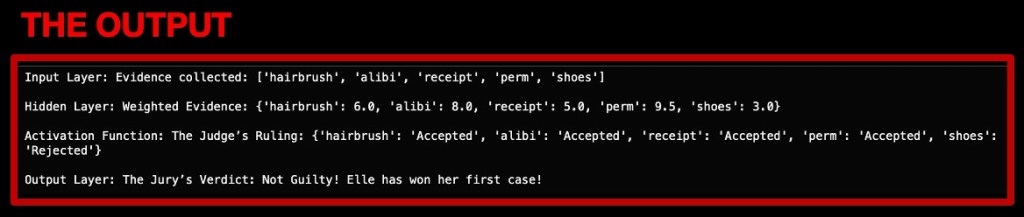

- Input Layer: The evidence of the case: the hairbrush, alibi, receipt, perm and shoes.

- Hidden Layer: The reasoning, or the arguments: the lawyer’s logic, storytelling, and pink highlighted notes. Here, each piece of evidence is ranked by an importance score, its weight. The perm should score the highest, at 0.95. You can’t wet your hair for 24 hours after getting a perm. Duh.

- Output Layer: The jury’s decision is a simple if/else check on the accepted evidence: if the perm holds up in court, Elle wins.

Next are the weights and the activation function. These aren’t layers, but they do help.

Weighted evidence: Elle applies a weight to each piece of evidence, based on how strong it is.

Activation Function: The judge filters out any weak evidence. Anything with a weight under 0.5 is rejected and doesn’t count in the case.

The Output

Based on the output, Elle Woods has won her first case, which matches what happens in the film. In that sense, we can say the model has been validated: its verdict is accurate to what occurred in the film.

Neural networks are more than math; they’re like mirrors.

They reflect the best and worst of how we learn, adapt, and create. They make us question what intelligence means and remind us that curiosity is our original algorithm in anything we do.

As neural networks continue to advance, their applications will broaden from healthcare and finance to the arts and communication, and maybe even some court cases. But at their core, they remain a reflection of how humans learn: by trial, error, and adjustment, or as Elle Woods might put it: “What, like it’s hard?”

The Code Below

```python
# Elle Woods Neural Network Simulation

# Input Layer: all of Elle Woods' evidence for her court case
evidence = ["hairbrush", "alibi", "receipt", "perm", "shoes"]

print("Input Layer: Evidence collected:", evidence)

# Hidden Layer: Elle's arguments, ranked by an importance score (weight)
weights = {
    "hairbrush": 0.6,
    "alibi": 0.8,
    "receipt": 0.50,
    # Strongest argument! She wins the case in the movie because
    # you can't wet your hair for 24 hours after getting a perm
    "perm": 0.95,
    "shoes": 0.3,  # weakest rank
}

# Elle scales each piece of evidence's weight to a score out of 10,
# so stronger evidence gets a higher score
weighted_values = {item: weights[item] * 10 for item in evidence}

print("\nHidden Layer: Weighted Evidence:", weighted_values)

# Activation Function: the Judge filters out weak evidence.
# A score under 5 (i.e., a weight under 0.5) is rejected.
def activation_filter(value):
    if value >= 5:
        return "Accepted"
    else:
        return "Rejected"

filtered_evidence = {item: activation_filter(value) for item, value in weighted_values.items()}

print("\nActivation Function: The Judge's Ruling:", filtered_evidence)

# Output Layer: the Jury makes the final decision
accepted_items = [item for item, status in filtered_evidence.items() if status == "Accepted"]

if "perm" in accepted_items:
    verdict = "Not Guilty! Elle has won her first case!"
else:
    verdict = "Guilty! Elle has lost her first case, not enough strong evidence!"

print("\nOutput Layer: The Jury's Verdict:", verdict)
```